The same month that Congress approved the federal American Rescue Plan that would send billions in Covid-relief aid to public schools, North Carolina’s State Superintendent Catherine Truitt hired Michael Maher to launch a new office dedicated to academic recovery. The goal was to help school districts make smart decisions about using the Elementary and Secondary School Emergency Relief (ESSER) funds and to provide the research and evaluation that could assess the money’s impact. FutureEd Associate Director Phyllis Jordan spoke with Maher, an N.C. State University assistant dean turned deputy state superintendent, about how North Carolina is tracking the unprecedented infusion of federal aid.

You’ve described North Carolina as data-rich and information-poor. Can you explain what you mean by that?

North Carolina has a long history of testing and accountability. We go all the way back into the 1990s, where we were doing testing in almost every grade, almost every subject, all in a single student information system. So we have a ton of student-level data, student performance data, and it’s all linked up in one place. And it’s available externally to researchers from across the country. If you read any number of journal articles, there are a lot of studies using North Carolina data. We’ve got a bunch of universities, including some pretty big research universities in the state, that regularly work with us and use that data. So why are we information-poor? Historically, what happens is people come and get the data, and they publish articles. But none of that information makes it back to the state agency. We have not had the opportunity to use it to drive program improvement.

How are you using federal Covid-relief aid to change that?

We used some of our Covid money to set up the Office of Learning Recovery & Acceleration. And part of that office, part of the mission, is to evaluate the impact of interventions that we put in place using Covid dollars. But we also used it to set up some infrastructure. We’ve got a quantitative methodologist, a qualitative methodologist, and a director of research who has a background and a history in program evaluation. Now, we’re running our own research and evaluation inside the building. But we are also doing some more partnership work with external researchers. And what we have been asking of those external partners is to provide real-time data as information becomes available. Rather than holding the findings for publication, we’re asking them to release some of the preliminary results to us in the form of a policy brief or research brief that we can share both internally and externally with our school partners to drive continuous improvement.

One of your first research efforts was a lost instructional time analysis. How did you measure the impact of the pandemic on your students?

That was a project we did in collaboration with the SAS Institute. North Carolina uses the SAS Institute’s EVAAS data (Education Value-Added Assessment System), and we worked with the SAS team to estimate a projected growth score for every student by tested subject and then compared the projected score to the actual growth score and measured the difference. We ended up with effect sizes for every tested subject and every tested grade level. And then we converted those effect sizes to months of learning.
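The comparison Maher describes (projected score versus actual score, an effect size, then a conversion to months) can be sketched roughly as follows. This is a minimal illustration and not the EVAAS method, which the interview does not detail. The function names, the choice to standardize by the spread of the projected scores, the assumed typical yearly growth (`annual_growth_sd`), and the nine-month school year are all assumptions made for the example.

```python
from statistics import mean, stdev

def effect_size(projected, actual):
    """Standardized gap between actual and projected scores.

    Assumption: the mean difference is divided by the sample standard
    deviation of the projected scores. The interview does not say how
    the real analysis standardized its differences.
    """
    diffs = [a - p for p, a in zip(projected, actual)]
    return mean(diffs) / stdev(projected)

def months_of_learning(effect, annual_growth_sd, months_per_year=9.0):
    """Convert an effect size to months of learning.

    Assumption: `annual_growth_sd` is the typical one-year gain for the
    grade and subject, in standard-deviation units, and a school year
    is `months_per_year` months long. Both values are hypothetical.
    """
    return effect / annual_growth_sd * months_per_year

# Made-up scores for five students, for illustration only.
projected = [50, 55, 60, 65, 70]
actual = [48, 52, 57, 63, 65]

es = effect_size(projected, actual)
print(f"effect size: {es:.2f}")
print(f"months of learning: {months_of_learning(es, annual_growth_sd=0.4):.1f}")
```

A negative result means students finished below their projection. Because the conversion divides by typical yearly growth, the same effect size translates into more months in grades and subjects where students normally gain less in a year.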

What were your findings?

We found that, for every subject, every demographic group, we saw a negative impact in the 2020-21 school year. But it varied. For Math I, a middle or early high school class, it’s a 15-month loss, a huge loss versus a couple of months in early grades reading. It varies from there, but from a high-level perspective, math, and in particular middle-grade math, was our most impacted subject.

What are you doing to address that?

We’ve got a $36-million program that we are launching next month across the state for school districts to run middle-grades math-specific interventions. The districts actually have some options. They can use third-party vendors. They can do tutoring if they choose. We’re going to see what districts do and then evaluate the impact of what they do.

North Carolina has an unusual circumstance, where the state legislature took control of the state share of the federal ESSER funds and decided how to dole it out. Has that complicated your job?

For sure, it complicates our work. The way it unfolded was my office led the effort to develop a budget for the $350 million reserved for the state education agency. We brought the budget to the state superintendent and then the State Board of Education. Everyone signed off on our proposed spending plan. Then we walked back across the quad to the General Assembly and presented it to House and Senate leaders. And they appropriated it in some ways that we recommended, but also in ways that we did not recommend.

For example, we recommended a few larger categories of spending including a “promising practices” fund where we could further invest in and test innovations. We also recommended no direct funding for any specific vendors, but there were a few that did receive an appropriation.

Are you tracking how well those programs are working?

We are tracking and we are evaluating all of those programs. So if you got ESSER funds in North Carolina and you are a vendor of some sort, we are evaluating your program.

What are some of the big questions you’re asking either in your internal research or with your external partners?

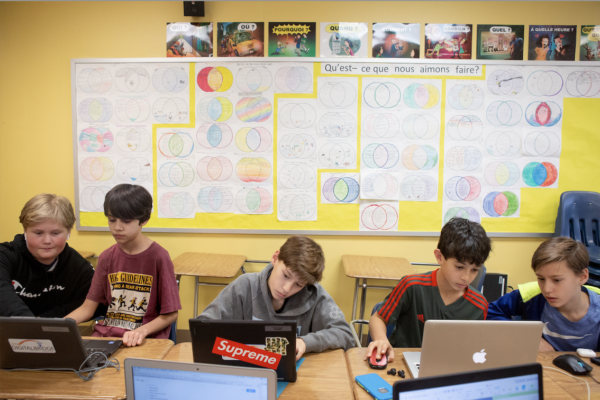

We’re looking at the impact of interventions. We’ve got some 30 different interventions in place, including summer learning programs and after-school programs. Some are vendor-specific. Some are not. We’ve invested $13.5 million in tutoring with the North Carolina Education Corps. We’re running some STEM programs, including a program called BetaBox in which the company brings trailers to schools with STEM activities. We’re doing LEGO robotics, for example. We did a career-accelerator program where we’re actually collecting both workforce credentials for students, but also doing things like career-development planning for middle school students. So we’re thinking about workforce transition with some kids. We’re doing a little bit of a lot of different things, testing what works, investing in those things.

In the end, do you think you’ll have a sense of the impact all this federal money has had?

We have a $6-million request for proposals that we released in partnership with another group here in North Carolina. And one of the things we’re looking at is a total return-on-investment study. So we’ll see. We may end up with very good data about how this money was spent and the impact, or we may end up like most other states and be, “We kind of know.”

How do you make all this data you’re collecting actionable for your school districts? How are you going to communicate, “Here’s what’s working, and here’s what’s not”?

In several ways. We actually started a blog series, with our first blog post back in March or April. Every month, we’ve got a new blog and white paper that is essentially the data we’ve collected and an analysis of that data. And the blogs, in particular, are written at a level that practitioners can digest. These are not research papers. We want people like parents, community members, stakeholders, legislators, to be able to read what we’re writing and interpret what we’re saying.

We also have a promising practices dashboard that is a map of the state. You can click on each of the counties, and you’ll see some of the things that the counties have put in place. We actually just convened all of our districts. We had them in person in cohorts based on size and student demographics, and now we’ll be doing virtual meetings with them quarterly, leading up to another in-person gathering next summer. We tell them, “We’re going to talk to you about data, data use, but we’re also talking about interventions.” And then they have time to actually talk with each other about interventions.

Will you emerge with a proof of concept that tutoring works, that mental health support works?

I go back and forth on tutoring. I struggle with it a lot because, in concept, it’s the answer. But in reality, there aren’t enough tutors. The real bang for your buck is when you’ve got teachers or paraprofessionals tutoring during the course of the day. The effect size begins to wane once you get outside of that group. If you start moving to volunteers, you move to parents, community members, the effect size isn’t quite as big as people think it is. And there may be other interventions that are far more effective than what I’m getting for tutoring. Now, tutoring has other benefits, of course, right? Like having an adult in a relationship with a child. You can’t measure that. I don’t mean to be a tutoring critic.

Perhaps you’re a tutoring realist.

I want to be a realist about tutoring. You need to train your tutors. They need to be on the curriculum. They need high-quality materials. There’s lots of stuff that has to go in to make it really, really effective. And to do that at scale for a state is an incredible undertaking.